Cool Inventions…

Thursday, November 8th, 2007

Time magazine has announced its inventions of the year, with the iPhone taking the top award.

Here are two amazing products….

We’re entering an exciting era of technology…. What will we see next year????

Like many AJAX-based sites, ZoomIn makes heavy use of autocompletion, specifically to make it easier and more reliable to enter addresses. But thanks to this article from Gizmodo, we can see what happens when autocomplete kicks in too early: Ask.com helpfully completes a phrase such as “is it legal to” with some bizarre and sometimes hilarious queries from other users.

But my favourite is an example that shows the touching faith that some people have in the Internet. Apparently it will answer questions that have stumped philosophers since the dawn of humanity:

We’re in the process of rebuilding our server infrastructure, shifting from Debian Sarge with Postgres 7.4 to Ubuntu 7 and Postgres 8. We’re currently investigating the stability and scalability of various Rails stacks. We’re also looking at different solutions for handling high-volume static content delivery, i.e. delivery of our map tiles (this could apply to any static content, such as thumbnails). During our research into reverse proxy alternatives, Paul Gold put me onto Varnish.

Varnish is written from the ground up to be a high-performance caching reverse proxy. The author built it out of frustration with Squid, and he provides a detailed analysis of why Squid sucks. In his own words…

Varnish is written from the ground up to be a high performance caching reverse proxy. Squid is a forward proxy that can be configured as a reverse proxy. Besides – Squid is rather old and designed like computer programs where supposed to be designed in 1980.

– Poul-Henning Kamp, Varnish architect and coder.

I’ve done a little testing of Varnish against lighttpd 1.4 and Apache 2.2, with some surprising results.

My test used ApacheBench (ab) in a brute-force test, fetching 1k, 5k, 10k and 20k image files with 50, 100 and 200 concurrent users (e.g. ab -n 20000 -c 100 http://test/5kimage.jpg). I tested against default installations of Apache 2.2 and lighttpd 1.4.12.
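The test matrix described above can be sketched as a small script that builds one ab command per file size and concurrency level. This is only a sketch: the host name `test` and the `5kimage.jpg` naming pattern come from the example command, while the other file names and the fixed request count of 20000 for every run are assumptions.

```python
from itertools import product

FILE_SIZES = ["1k", "5k", "10k", "20k"]
CONCURRENCY = [50, 100, 200]
REQUESTS = 20000            # assumed to be the same for every run
BASE_URL = "http://test"    # host taken from the example command


def ab_commands():
    """Return one ab argument list per (file size, concurrency) pair."""
    commands = []
    for size, users in product(FILE_SIZES, CONCURRENCY):
        # File names other than 5kimage.jpg are assumed to follow the same pattern.
        url = f"{BASE_URL}/{size}image.jpg"
        commands.append(["ab", "-n", str(REQUESTS), "-c", str(users), url])
    return commands


if __name__ == "__main__":
    for cmd in ab_commands():
        print(" ".join(cmd))
```

Each argument list could be passed to `subprocess.run` on a machine with ab installed; printing the commands first makes it easy to check the matrix before putting load on a server.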

Here are the results:

| File Size | Concurrent Users | Apache 2.2 (reqs/sec) | Lighttpd 1.4.12 (reqs/sec) | Varnish 1.1.1 (reqs/sec) |
|---|---|---|---|---|
| 1k | 50 | 3792 | 2050 | 5386 |
| 1k | 100 | 3949 | 2135 | 5471 |
| 1k | 200 | 3973 | 1946 | 5228 |
| 5k | 50 | 2087* | 1655 | 2075* |
| 5k | 100 | 2051* | 1764 | 2076* |
| 5k | 200 | 2006* | 1764 | 2062 |
| 10k | 50 | 1063* | 1065* | 1065* |
| 10k | 100 | 1059* | 1064* | 1060* |
| 10k | 200 | 1056* | 1056* | 1055* |
| 20k | 50 | 571* | 560* | 570* |
| 20k | 100 | 569* | 560* | 564* |
| 20k | 200 | 566* | 562* | 566* |

* = Denotes that network throughput was approaching 10.93Mb/sec. The capacity of the network connection was effectively putting a cap on the throughput.

A couple of things to note from the testing. First, Apache forks a lot of processes, while lighttpd and Varnish use threads instead. Also, the CPU seemed to be under less load with lighttpd and Varnish than with Apache.

From the results, Varnish excels at serving small files from its cache, and is as fast as the other servers at larger file sizes. I want to do a bit more testing against Varnish. For my next set of tests, I’ll run the web servers against 100 random images and post the results.
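The cap described in the footnote can be checked with some quick arithmetic: if the link tops out at a fixed throughput, the request rate can be no higher than that throughput divided by the file size. The sketch below assumes that “10.93Mb” means megabytes per second and that each file is exactly its nominal size; it also ignores HTTP headers, so the numbers are rough ceilings only.

```python
THROUGHPUT_MB_PER_SEC = 10.93  # from the footnote; assumed to mean megabytes


def max_requests_per_sec(file_size_kb, throughput_mb=THROUGHPUT_MB_PER_SEC):
    """Rough ceiling on requests/sec if the link carried only file payload."""
    return throughput_mb * 1024 / file_size_kb


if __name__ == "__main__":
    for size_kb in (5, 10, 20):
        print(f"{size_kb}k: about {max_requests_per_sec(size_kb):.0f} reqs/sec")
```

Under these assumptions the ceilings come out at roughly 2240, 1120 and 560 requests per second for the 5k, 10k and 20k files. The starred results in the table sit within about 10% of those figures, which fits the idea that the network, rather than the server, was the limit.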

Here’s something for Monday morning…

37signals have written a post about what Gordon Ramsay can teach software developers. It’s a great post, with some good analogies. That made me think….

Forget Y-Combinator, forget Full Code Press, what about Hell’s Code?!? Get developers to spend a number of weeks going through a number of coding challenges and eliminations until one of them wins full funding for a start-up for a year? Clients would wait around until the developers complete their prototypes, beta apps and full applications, and give them a rating….

I thought it would be fun to imagine what Gordon Ramsay would say and do if he ran Hell’s Code ???

What would he yell at ???

I can imagine that if Gordon Ramsay found something wrong, he’d delete the code and tell them to start again. And if the developers made too many mistakes, he’d shut down the coding and send all the clients home!

What else would Gordon Ramsay do ????

Here’s a fix for a problem I had using a USB drive on both Mac and Windows.

Problem: A directory of files was missing from an NTFS-formatted USB drive. But when I browsed the disk on the Mac, the directory and files were visible.

Looking at the disk properties (ie. disk space used on the drive), it showed that the files were still there. It was really strange, and a lot of googling couldn’t find the answer.

Solution: Use the DOS command chkdsk /f to fix the errors on the disk. The USB disk must have been corrupted when switching between machines.

Thanks to Milton for suggesting the fix. The problem was exacerbated because it happened while I was migrating from Windows to Mac. 🙂

I switched to a Mac about 6 weeks ago. Here are my thoughts on the pros and cons of my experience.

Pros

Cons

I’m really glad that I have switched. I’ll wait a bit before I install Leopard.

Following up from yesterday’s post about the Open Source Awards, here’s a new local discussion group about the use of open source software in Geographic Information Systems (GIS). As a web mapping company, we make heavy use of open source software in both the web and the mapping sides of our business: I’ll come back to the web side, but here are some quick notes on the open source GIS packages that we use.

This came as a very pleasant surprise: In last night’s NZ Open Source Awards, ProjectX won the “Open Source Use in Business” category, and NZ Summer of Code took out the “Open Source Use in Education” award.

We’ll write some more soon about the advantages we’ve found to using open source, and some specific software that we can’t do without; but for the moment we’re all just rather stunned!

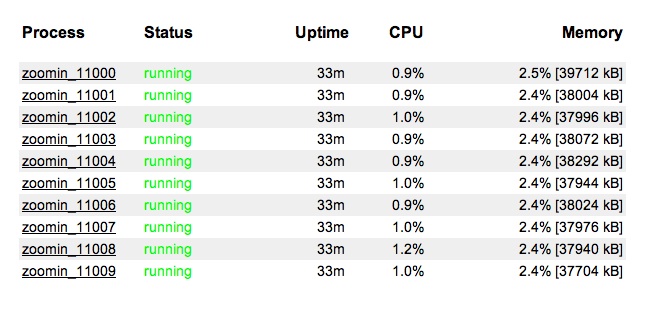

I’ve just discovered Monit, an amazingly simple process monitoring tool that will monitor, alert on and restart processes if it sees any trouble. We’re currently in the process of adding Monit to all our production servers. I’ve been testing how sturdy it is and it seems really good: I can kill a process and Monit will bring it back, and it will even restart a process that gets too big!

The configuration is in a pseudo-text format that is pretty easy to understand. Here’s an edited version of our config.

    check process rails_app_4000 with pidfile /path/to/rails_app/tmp/pids/mongrel.4000.pid
      group mongrel
      start program = "/usr/bin/mongrel_rails start -d -e production -a 127.0.0.1 -c /path/to/rails_app --user www --group www -p 4000 -P tmp/pids/mongrel.4000.pid -l /path/to/rails_app/log/mongrel.4000.log"
      stop program = "/usr/bin/mongrel_rails stop -c /path/to/rails_app/ -P tmp/pids/mongrel.4000.pid"
      if failed host 127.0.0.1 port 4000 protocol http
        with timeout 20 seconds
        then restart
      if totalmem > 100 Mb then restart
      if cpu is greater than 60% for 2 cycles then alert
      #if cpu > 90% for 5 cycles then restart
      #if loadavg(5min) greater than 10 for 8 cycles then restart
      if 3 restarts within 5 cycles then timeout
      group rails_app
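With several mongrels on consecutive ports, writing one such stanza per port by hand gets repetitive. As a rough sketch of how the stanzas could be generated from a template, here is a short script. The `monit_config` function and the port list are hypothetical, the template is a trimmed copy of the config above, and this is not how Bowtie or any other existing tool does it.

```python
# Trimmed copy of the stanza above, with the app path and port as placeholders.
STANZA = '''check process rails_app_{port} with pidfile {app}/tmp/pids/mongrel.{port}.pid
  start program = "/usr/bin/mongrel_rails start -d -e production -a 127.0.0.1 -c {app} --user www --group www -p {port} -P tmp/pids/mongrel.{port}.pid -l {app}/log/mongrel.{port}.log"
  stop program = "/usr/bin/mongrel_rails stop -c {app} -P tmp/pids/mongrel.{port}.pid"
  if failed host 127.0.0.1 port {port} protocol http
    with timeout 20 seconds
    then restart
  if totalmem > 100 Mb then restart
  if 3 restarts within 5 cycles then timeout
  group rails_app
'''


def monit_config(app_path, ports):
    """Return one check-process stanza per port, separated by blank lines."""
    return "\n".join(STANZA.format(app=app_path, port=port) for port in ports)


if __name__ == "__main__":
    # Hypothetical three-mongrel cluster on ports 4000-4002.
    print(monit_config("/path/to/rails_app", [4000, 4001, 4002]))
```

The generated text would still need checking with Monit’s own syntax check before going anywhere near a production server.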

Monit is “mongrel friendly” thanks to Peter Jones, who has developed a tool called Bowtie to generate mongrel_cluster + apache + monit configs. It’s pretty easy to use.

We’re now looking at Seesaw as another tool to help with the redundancy of our Rails stack. (It’s cool to see an app coming out of Australia!)

We’ve known about the secret scaling powers of memcache for some time (here’s a list of sites using it), but I have just read some slides from a presentation that gives an overview of how Facebook is using memcache. (Slide 21)

Facebook uses

Wow, 3 terabytes of memcache! It looks like memcache is the application stack for Facebook. Memcache and equivalent tools will change the way that people think about the design and structure of their applications. But just because you’ve got blazingly fast memory doesn’t mean that you shouldn’t tune your database!

We’ve just noticed that there is a pgmemcache API for memcache and PostgreSQL. Now we have to think about where to put the cache: at the application level or the database level?

FYI: The Facebook team have been contributing to the memcache source and they recommend that you use v1.2.x rather than 1.1.x. (Note that Debian and Ubuntu have 1.1.3 by default, so you’ll need to install the newer package manually.)
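To make the application-level option concrete, here is a minimal sketch of the cache-aside pattern: the application checks the cache first and only goes to the database on a miss. A plain dict stands in for memcache, and `fake_db_lookup` is a hypothetical stand-in for a PostgreSQL query, so none of this reflects the pgmemcache API or a real memcache client.

```python
class CacheAside:
    """Application-level cache-aside lookups in front of a slower store."""

    def __init__(self, cache, load_from_db):
        self.cache = cache                # dict standing in for memcache (assumption)
        self.load_from_db = load_from_db  # hypothetical database lookup function

    def get(self, key):
        # Serve from the cache when possible; otherwise load and remember.
        if key in self.cache:
            return self.cache[key]
        value = self.load_from_db(key)
        self.cache[key] = value
        return value

    def invalidate(self, key):
        # Call after writing to the database so stale data is not served.
        self.cache.pop(key, None)


db_calls = []


def fake_db_lookup(key):
    """Hypothetical stand-in for a database query; records each call."""
    db_calls.append(key)
    return f"row for {key}"


store = CacheAside(cache={}, load_from_db=fake_db_lookup)
store.get("suburb:1")         # miss: goes to the database
store.get("suburb:1")         # hit: served from the cache
store.invalidate("suburb:1")
store.get("suburb:1")         # miss again after invalidation
print(f"database lookups: {len(db_calls)}")
```

With the cache at the application level, the application has to remember to invalidate on every write. Caching at the database level moves that responsibility into the database, at the cost of putting more logic there.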